import pandas as pd

import numpy as np

import tensorflow as tf

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Dropout, BatchNormalization

from tensorflow.keras.optimizers import Adam, RMSprop, SGD, Adagrad, Adadelta, Adamax, Nadam

from tensorflow.keras.callbacks import EarlyStopping, ModelCheckpoint, ReduceLROnPlateau

from tensorflow.keras.losses import MeanSquaredLogarithmicError, Huber, LogCosh, MeanSquaredError, MeanAbsoluteError

from sklearn.model_selection import KFold

import tkinter as tk

from tkinter import messagebox, ttk

from tkcalendar import DateEntry

import os

import random

import matplotlib.pyplot as plt

import requests

import io # Importa il modulo io

# Aggiunta di colorama per il testo colorato nel terminale

import colorama

from colorama import Fore, Style

colorama.init()

# Imposta i semi casuali per garantire riproducibilità

def set_seed(seed_value=42):

os.environ['PYTHONHASHSEED'] = str(seed_value)

random.seed(seed_value)

np.random.seed(seed_value)

tf.random.set_seed(seed_value)

set_seed()

# Definisci gli URL dei file delle ruote

file_ruote = {

'BA': 'https://raw.githubusercontent.com/Lottopython/estrazioni/refs/heads/main/BARI.txt',

'CA': 'https://raw.githubusercontent.com/Lottopython/estrazioni/refs/heads/main/CAGLIARI.txt',

'FI': 'https://raw.githubusercontent.com/Lottopython/estrazioni/refs/heads/main/FIRENZE.txt',

'GE': 'https://raw.githubusercontent.com/Lottopython/estrazioni/refs/heads/main/GENOVA.txt',

'MI': 'https://raw.githubusercontent.com/Lottopython/estrazioni/refs/heads/main/MILANO.txt',

'NA': 'https://raw.githubusercontent.com/Lottopython/estrazioni/refs/heads/main/NAPOLI.txt',

'PA': 'https://raw.githubusercontent.com/Lottopython/estrazioni/refs/heads/main/PALERMO.txt',

'RM': 'https://raw.githubusercontent.com/Lottopython/estrazioni/refs/heads/main/ROMA.txt',

'TO': 'https://raw.githubusercontent.com/Lottopython/estrazioni/refs/heads/main/TORINO.txt',

'VE': 'https://raw.githubusercontent.com/Lottopython/estrazioni/refs/heads/main/VENEZIA.txt',

'NZ': 'https://raw.githubusercontent.com/Lottopython/estrazioni/refs/heads/main/NAZIONALE.txt'

}

# Variabili globali per salvare le scelte

selected_ruota = None

selected_optimizer = None

selected_loss = None

numeri_interi = []

ultima_estrazione_label = None # variabile globale per la label

data_ultima_estrazione = "Data non disponibile" # inizializza la variabile globale

# Valori consigliati per i parametri di addestramento

suggested_values = {

"Adam": {"epochs": 100, "batch_size": 64, "learning_rate": 0.001},

"RMSprop": {"epochs": 100, "batch_size": 64, "learning_rate": 0.001},

"SGD": {"epochs": 100, "batch_size": 64, "learning_rate": 0.01},

"Adagrad": {"epochs": 100, "batch_size": 64, "learning_rate": 0.01},

"Adadelta": {"epochs": 100, "batch_size": 64, "learning_rate": 1.0},

"Adamax": {"epochs": 100, "batch_size": 64, "learning_rate": 0.002},

"Nadam": {"epochs": 100, "batch_size": 64, "learning_rate": 0.002},

"mse": {"epochs": 100, "batch_size": 64, "learning_rate": 0.001},

"mae": {"epochs": 100, "batch_size": 64, "learning_rate": 0.001},

"msle": {"epochs": 100, "batch_size": 64, "learning_rate": 0.001},

"huber": {"epochs": 100, "batch_size": 64, "learning_rate": 0.001},

"logcosh": {"epochs": 100, "batch_size": 64, "learning_rate": 0.001}

}

# Funzione per caricare i dati della ruota selezionata

def carica_dati(ruota, start_date, end_date):

file_url = file_ruote.get(ruota)

if not file_url:

messagebox.showerror("Errore", "Ruota non trovata.")

return None, None

try:

response = requests.get(file_url)

response.raise_for_status()

data = pd.read_csv(io.StringIO(response.text), header=None, sep="\t")

data.iloc[:, 0] = pd.to_datetime(data.iloc[:, 0], format='%Y/%m/%d')

mask = (data.iloc[:, 0] >= start_date) & (data.iloc[:, 0] <= end_date)

data = data.loc[mask]

numeri = data.iloc[:, 2:].values

if numeri.size == 0:

messagebox.showerror("Errore", "Nessun dato trovato per l'intervallo selezionato.")

return None, None

numeri = numeri / 90.0

return numeri[:-1], numeri[1:]

except requests.exceptions.RequestException as e:

messagebox.showerror("Errore", f"Errore durante il recupero dei dati: {str(e)}")

return None, None

except Exception as e:

messagebox.showerror("Errore", str(e))

return None, None

# Funzione per validare gli input e ottenere le date nel formato corretto

def validate_input():

try:

start_date_obj = entry_start.get_date()

end_date_obj = entry_end.get_date()

start_date = pd.to_datetime(start_date_obj.strftime('%Y/%m/%d'))

end_date = pd.to_datetime(end_date_obj.strftime('%Y/%m/%d'))

if start_date >= end_date:

raise ValueError("La data di inizio deve essere precedente alla data di fine.")

epochs = int(entry_epochs.get())

if epochs <= 0:

raise ValueError("Il numero di epoche deve essere positivo.")

batch_size = int(entry_batch_size.get())

if batch_size <= 0:

raise ValueError("Il batch size deve essere positivo.")

learning_rate = float(entry_learning_rate.get())

if learning_rate <= 0:

raise ValueError("Il learning rate deve essere positivo.")

return start_date, end_date, epochs, batch_size, learning_rate

except ValueError as e:

messagebox.showerror("Errore", str(e))

return None

# Funzione per aggiornare lo stato

def update_status(message):

status_bar.config(text=message)

root.update_idletasks()

# Funzione per valutare la perdita di validazione

def valuta_val_loss(val_loss):

if val_loss < 0.05:

return "Ottimale"

elif val_loss < 0.1:

return "Discreto"

elif val_loss < 0.2:

return "Sufficiente"

else:

return "Scarso"

# Definisci la funzione TensorFlow al di fuori del ciclo

@tf.function(reduce_retracing=True)

def make_prediction_function(model, data):

return model(data, training=False)

# Funzione per avviare la previsione

def avvia_previsione():

global numeri_interi, data_ultima_estrazione

update_status("Inizio della previsione...")

if not selected_ruota or not selected_optimizer or not selected_loss:

update_status("Errore: Selezionare ruota, ottimizzatore e funzione di perdita.")

return

print(Fore.GREEN + f"Ruota Selezionata: {selected_ruota}" + Style.RESET_ALL)

print(Fore.GREEN + f"Ultima Data di Estrazione: {data_ultima_estrazione}" + Style.RESET_ALL)

validation = validate_input()

if not validation:

return

start_date, end_date, epochs, batch_size, learning_rate = validation

update_status("Caricamento dati...")

X, y = carica_dati(selected_ruota, start_date, end_date)

if X is None or y is None:

return

if use_cross_validation.get():

kfold = KFold(n_splits=5, shuffle=True, random_state=42)

val_losses = []

predictions = []

for train_index, val_index in kfold.split(X):

update_status("Addestramento del modello...")

X_train, X_val = X[train_index], X[val_index]

y_train, y_val = y[train_index], y[val_index]

model = Sequential([

Dense(1024, input_shape=(X.shape[1],), activation="relu"),

BatchNormalization(),

Dropout(0.5),

Dense(512, activation="relu"),

BatchNormalization(),

Dropout(0.5),

Dense(256, activation="relu"),

BatchNormalization(),

Dropout(0.5),

Dense(y.shape[1], activation="linear")

])

optimizer = {

'Adam': Adam(learning_rate=learning_rate),

'RMSprop': RMSprop(learning_rate=learning_rate),

'SGD': SGD(learning_rate=learning_rate),

'Adagrad': Adagrad(learning_rate=learning_rate),

'Adadelta': Adadelta(learning_rate=learning_rate),

'Adamax': Adamax(learning_rate=learning_rate),

'Nadam': Nadam(learning_rate=learning_rate)

}[selected_optimizer]

loss_function = {

"mse": MeanSquaredError(),

"mae": MeanAbsoluteError(),

"msle": MeanSquaredLogarithmicError(),

"huber": Huber(),

"logcosh": LogCosh()

}[selected_loss]

metrics_dict = {

"mse": MeanSquaredError(),

"mae": MeanAbsoluteError(),

"msle": MeanSquaredLogarithmicError(),

"huber": Huber(),

"logcosh": LogCosh()

}

metric = metrics_dict[selected_loss]

model.compile(optimizer=optimizer, loss=loss_function, metrics=[metric])

callbacks = []

if use_early_stopping.get():

early_stopping = EarlyStopping(monitor='val_loss', patience=15, restore_best_weights=True)

callbacks.append(early_stopping)

checkpoint = ModelCheckpoint('best_model.keras', monitor='val_loss', save_best_only=True)

reduce_lr = ReduceLROnPlateau(monitor='val_loss', factor=0.5, patience=5, min_lr=1e-6)

callbacks.extend([checkpoint, reduce_lr])

# Addestramento del modello

history = model.fit(X_train, y_train, epochs=epochs, batch_size=batch_size, verbose=1,

validation_data=(X_val, y_val), callbacks=callbacks)

val_losses.append(min(history.history['val_loss']))

model.load_weights('best_model.keras')

next_prediction = make_prediction_function(model, X[-1:])

predictions.append(next_prediction.numpy())

ensemble_prediction = np.mean(predictions, axis=0)

numeri_interi = np.clip(np.round(ensemble_prediction * 90).astype(int), 1, 90).flatten()

media_val_loss = np.mean(val_losses)

valutazione = valuta_val_loss(media_val_loss)

text_output.delete('1.0', tk.END)

text_output.insert(tk.END, f"Dati utilizzati per la previsione:\n{', '.join(map(str, numeri_interi))}\n")

text_output.insert(tk.END, f"Media val_loss in validazione incrociata: {media_val_loss:.4f}\n")

text_output.insert(tk.END, f"Valutazione: {valutazione}\n")

plot_loss(history)

update_status("Previsione completata")

else:

update_status("Addestramento del modello...")

predictions = []

if use_ensemble.get():

num_models = int(spinbox_num_models.get())

for i in range(num_models):

model = Sequential([

Dense(1024, input_shape=(X.shape[1],), activation="relu"),

BatchNormalization(),

Dropout(0.5),

Dense(512, activation="relu"),

BatchNormalization(),

Dropout(0.5),

Dense(256, activation="relu"),

BatchNormalization(),

Dropout(0.5),

Dense(y.shape[1], activation="linear")

])

optimizer = {

'Adam': Adam(learning_rate=learning_rate),

'RMSprop': RMSprop(learning_rate=learning_rate),

'SGD': SGD(learning_rate=learning_rate),

'Adagrad': Adagrad(learning_rate=learning_rate),

'Adadelta': Adadelta(learning_rate=learning_rate),

'Adamax': Adamax(learning_rate=learning_rate),

'Nadam': Nadam(learning_rate=learning_rate)

}[selected_optimizer]

loss_function = {

"mse": MeanSquaredError(),

"mae": MeanAbsoluteError(),

"msle": MeanSquaredLogarithmicError(),

"huber": Huber(),

"logcosh": LogCosh()

}[selected_loss]

metrics_dict = {

"mse": MeanSquaredError(),

"mae": MeanAbsoluteError(),

"msle": MeanSquaredLogarithmicError(),

"huber": Huber(),

"logcosh": LogCosh()

}

metric = metrics_dict[selected_loss]

model.compile(optimizer=optimizer, loss=loss_function, metrics=[metric])

callbacks = []

if use_early_stopping.get():

early_stopping = EarlyStopping(monitor='val_loss', patience=15, restore_best_weights=True)

callbacks.append(early_stopping)

checkpoint = ModelCheckpoint('best_model.keras', monitor='val_loss', save_best_only=True)

reduce_lr = ReduceLROnPlateau(monitor='val_loss', factor=0.5, patience=5, min_lr=1e-6)

callbacks.extend([checkpoint, reduce_lr])

history = model.fit(X, y, epochs=epochs, batch_size=batch_size, verbose=1,

validation_split=0.2, callbacks=callbacks)

model.load_weights('best_model.keras')

next_prediction = make_prediction_function(model, X[-1:])

predictions.append(next_prediction.numpy())

ensemble_prediction = np.mean(predictions, axis=0)

numeri_interi = np.clip(np.round(ensemble_prediction * 90).astype(int), 1, 90).flatten()

else:

model = Sequential([

Dense(1024, input_shape=(X.shape[1],), activation="relu"),

BatchNormalization(),

Dropout(0.5),

Dense(512, activation="relu"),

BatchNormalization(),

Dropout(0.5),

Dense(256, activation="relu"),

BatchNormalization(),

Dropout(0.5),

Dense(y.shape[1], activation="linear")

])

optimizer = {

'Adam': Adam(learning_rate=learning_rate),

'RMSprop': RMSprop(learning_rate=learning_rate),

'SGD': SGD(learning_rate=learning_rate),

'Adagrad': Adagrad(learning_rate=learning_rate),

'Adadelta': Adadelta(learning_rate=learning_rate),

'Adamax': Adamax(learning_rate=learning_rate),

'Nadam': Nadam(learning_rate=learning_rate)

}[selected_optimizer]

loss_function = {

"mse": MeanSquaredError(),

"mae": MeanAbsoluteError(),

"msle": MeanSquaredLogarithmicError(),

"huber": Huber(),

"logcosh": LogCosh()

}[selected_loss]

metrics_dict = {

"mse": MeanSquaredError(),

"mae": MeanAbsoluteError(),

"msle": MeanSquaredLogarithmicError(),

"huber": Huber(),

"logcosh": LogCosh()

}

metric = metrics_dict[selected_loss]

model.compile(optimizer=optimizer, loss=loss_function, metrics=[metric])

callbacks = []

if use_early_stopping.get():

early_stopping = EarlyStopping(monitor='val_loss', patience=15, restore_best_weights=True)

callbacks.append(early_stopping)

checkpoint = ModelCheckpoint('best_model.keras', monitor='val_loss', save_best_only=True)

reduce_lr = ReduceLROnPlateau(monitor='val_loss', factor=0.5, patience=5, min_lr=1e-6)

callbacks.extend([checkpoint, reduce_lr])

history = model.fit(X, y, epochs=epochs, batch_size=batch_size, verbose=1,

validation_split=0.2, callbacks=callbacks)

model.load_weights('best_model.keras')

next_prediction = make_prediction_function(model, X[-1:])

numeri_interi = np.clip(np.round(next_prediction.numpy() * 90).astype(int), 1, 90).flatten()

val_loss = min(history.history['val_loss'])

valutazione = valuta_val_loss(val_loss)

text_output.delete('1.0', tk.END)

text_output.insert(tk.END, f"Dati utilizzati per la previsione:\n{', '.join(map(str, numeri_interi))}\n")

text_output.insert(tk.END, f"Val Loss: {val_loss:.4f}\n")

text_output.insert(tk.END, f"Valutazione: {valutazione}\n")

plot_loss(history)

update_status("Previsione completata")

# Funzione per visualizzare il grafico delle perdite (loss)

def plot_loss(history):

plt.figure(figsize=(10, 6))

plt.plot(history.history['loss'], label='Loss', color='blue')

plt.plot(history.history['val_loss'], label='Val Loss', color='orange')

plt.title('Andamento della Loss durante l\'Addestramento')

plt.xlabel('Epoch')

plt.ylabel('Loss')

plt.legend()

plt.grid(True)

plt.show()

# Funzioni per la selezione di ruota, ottimizzatore e funzione di perdita

def seleziona_ruota(ruota):

global selected_ruota, data_ultima_estrazione

selected_ruota = ruota

data_ultima_estrazione = ottieni_data_ultima_estrazione(selected_ruota)

if ultima_estrazione_label: # Verifica che la label sia stata creata

ultima_estrazione_label.config(text=f"Aggiornato alla data del: {data_ultima_estrazione}")

for frame in frame_ruote.values():

frame.configure(style="TFrame")

frame_ruote[ruota].configure(style="Selected.TFrame")

def seleziona_optimizer(opt):

global selected_optimizer

selected_optimizer = opt

for btn in pulsanti_optimizer.values():

btn.configure(style="TButton")

pulsanti_optimizer[opt].configure(style="Selected.TButton")

update_suggested_values()

def seleziona_loss(loss):

global selected_loss

selected_loss = loss

for btn in pulsanti_loss.values():

btn.configure(style="TButton")

pulsanti_loss[loss].configure(style="Selected.TButton")

update_suggested_values()

def update_suggested_values():

if selected_optimizer and selected_loss:

optimizer_values = suggested_values.get(selected_optimizer, {})

loss_values = suggested_values.get(selected_loss, {})

epochs = optimizer_values.get("epochs", loss_values.get("epochs", 100))

batch_size = optimizer_values.get("batch_size", loss_values.get("batch_size", 64))

learning_rate = optimizer_values.get("learning_rate", loss_values.get("learning_rate", 0.001))

entry_epochs.delete(0, tk.END)

entry_epochs.insert(0, str(epochs))

entry_batch_size.delete(0, tk.END)

entry_batch_size.insert(0, str(batch_size))

entry_learning_rate.delete(0, tk.END)

entry_learning_rate.insert(0, str(learning_rate))

# Funzione per ottenere la data dell'ultima estrazione

def ottieni_data_ultima_estrazione(ruota):

url_file = file_ruote.get(ruota)

if not url_file:

return "Data non disponibile"

try:

response = requests.get(url_file)

response.raise_for_status() # Lancia un'eccezione per codici di stato HTTP errati

righe = response.content.decode('utf-8').strip().split('\n')

# Controlla se ci sono righe nel file

if not righe:

return "Data non disponibile (file vuoto)"

ultima_riga = righe[-1] # Ottiene l'ultima riga

data_estrazione = ultima_riga.split('\t')[0] # Divide la riga e prende la data

return data_estrazione

except requests.exceptions.RequestException as e:

print(f"Errore durante la richiesta HTTP: {e}")

return "Data non disponibile (errore HTTP)"

except IndexError as e:

print(f"Errore: Impossibile trovare la data nell'ultima riga del file.")

return "Data non disponibile (errore parsing)"

except Exception as e:

print(f"Errore durante l'elaborazione: {e}")

return "Data non disponibile (errore generico)"

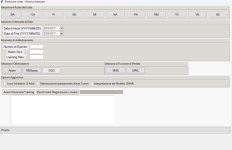

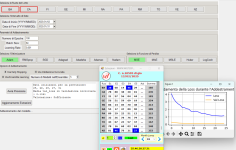

# -------------------------- INTERFACCIA GRAFICA --------------------------

# Inizializza la finestra principale

root = tk.Tk()

root.title("Previsione Lotto")

root.geometry("1200x750")

use_early_stopping = tk.BooleanVar(value=True)

use_cross_validation = tk.BooleanVar(value=True)

use_ensemble = tk.BooleanVar(value=True)

style = ttk.Style()

style.theme_use("clam")

style.configure("TLabel", font=("Helvetica", 10), padding=2, background="#f0f0f0")

style.configure("TButton", font=("Helvetica", 10), padding=2, background="#e0e0e0")

style.configure("TFrame", padding=1, background="#f8f8f8")

style.configure("Selected.TButton", background="lightgreen")

style.configure("Selected.TFrame", background="red")

main_frame = ttk.Frame(root, padding="5")

main_frame.grid(row=0, column=0, sticky=(tk.W, tk.E, tk.N, tk.S))

# --- Selezione della Ruota ---

ruota_frame = ttk.LabelFrame(main_frame, text="Seleziona la Ruota del Lotto", padding="5")

ruota_frame.grid(row=0, column=0, columnspan=2, pady=2, sticky=(tk.W, tk.E))

pulsanti_ruote = {}

frame_ruote = {}

for idx, ruota in enumerate(file_ruote.keys()):

frame = ttk.Frame(ruota_frame, style="TFrame")

frame.grid(row=0, column=idx, padx=2)

pulsanti_ruote[ruota] = ttk.Button(frame, text=ruota, command=lambda r=ruota: seleziona_ruota(r))

pulsanti_ruote[ruota].pack(padx=2, pady=2)

frame_ruote[ruota] = frame

# --- Selezione delle Date con tkcalendar ---

date_frame = ttk.LabelFrame(main_frame, text="Seleziona l'Intervallo di Date", padding="5")

date_frame.grid(row=1, column=0, columnspan=2, pady=2, sticky=(tk.W, tk.E))

ttk.Label(date_frame, text="Data di Inizio (YYYY/MM/DD):").grid(row=0, column=0, padx=2)

entry_start = DateEntry(date_frame, date_pattern='yyyy/mm/dd', width=12)

entry_start.grid(row=0, column=1, padx=2)

ttk.Label(date_frame, text="Data di Fine (YYYY/MM/DD):").grid(row=1, column=0, padx=2)

entry_end = DateEntry(date_frame, date_pattern='yyyy/mm/dd', width=12)

entry_end.grid(row=1, column=1, padx=2)

# --- Parametri di Addestramento ---

training_frame = ttk.LabelFrame(main_frame, text="Parametri di Addestramento", padding="5")

training_frame.grid(row=2, column=0, columnspan=2, pady=2, sticky=(tk.W, tk.E))

ttk.Label(training_frame, text="Numero di Epoche:").grid(row=0, column=0, padx=2)

entry_epochs = ttk.Entry(training_frame, width=10)

entry_epochs.grid(row=0, column=1, padx=2)

ttk.Label(training_frame, text="Batch Size:").grid(row=1, column=0, padx=2)

entry_batch_size = ttk.Entry(training_frame, width=10)

entry_batch_size.grid(row=1, column=1, padx=2)

ttk.Label(training_frame, text="Learning Rate:").grid(row=2, column=0, padx=2)

entry_learning_rate = ttk.Entry(training_frame, width=10)

entry_learning_rate.grid(row=2, column=1, padx=2)

# --- Selezione dell'Ottimizzatore ---

optimizer_frame = ttk.LabelFrame(main_frame, text="Seleziona l'Ottimizzatore", padding="5")

optimizer_frame.grid(row=3, column=0, pady=2, sticky=(tk.W, tk.E))

pulsanti_optimizer = {

"Adam": ttk.Button(optimizer_frame, text="Adam", command=lambda: seleziona_optimizer("Adam")),

"RMSprop": ttk.Button(optimizer_frame, text="RMSprop", command=lambda: seleziona_optimizer("RMSprop")),

"SGD": ttk.Button(optimizer_frame, text="SGD", command=lambda: seleziona_optimizer("SGD")),

"Adagrad": ttk.Button(optimizer_frame, text="Adagrad", command=lambda: seleziona_optimizer("Adagrad")),

"Adadelta": ttk.Button(optimizer_frame, text="Adadelta", command=lambda: seleziona_optimizer("Adadelta")),

"Adamax": ttk.Button(optimizer_frame, text="Adamax", command=lambda: seleziona_optimizer("Adamax")),

"Nadam": ttk.Button(optimizer_frame, text="Nadam", command=lambda: seleziona_optimizer("Nadam"))

}

for idx, btn in enumerate(pulsanti_optimizer.values()):

btn.grid(row=0, column=idx, padx=2)

# --- Selezione della Funzione di Perdita ---

loss_frame = ttk.LabelFrame(main_frame, text="Seleziona la Funzione di Perdita", padding="5")

loss_frame.grid(row=3, column=1, pady=2, sticky=(tk.W, tk.E))

pulsanti_loss = {

"mse": ttk.Button(loss_frame, text="MSE", command=lambda: seleziona_loss("mse")),

"mae": ttk.Button(loss_frame, text="MAE", command=lambda: seleziona_loss("mae")),

"msle": ttk.Button(loss_frame, text="MSLE", command=lambda: seleziona_loss("msle")),

"huber": ttk.Button(loss_frame, text="Huber", command=lambda: seleziona_loss("huber")),

"logcosh": ttk.Button(loss_frame, text="LogCosh", command=lambda: seleziona_loss("logcosh"))

}

for idx, btn in enumerate(pulsanti_loss.values()):

btn.grid(row=0, column=idx, padx=2)

# --- Opzioni Avanzate ---

advanced_frame = ttk.LabelFrame(main_frame, text="Opzioni Avanzate", padding="5")

advanced_frame.grid(row=4, column=0, columnspan=2, pady=2, sticky=(tk.W, tk.E))

check_early_stopping = ttk.Checkbutton(advanced_frame, text="Usa Early Stopping", variable=use_early_stopping)

check_early_stopping.grid(row=0, column=0, padx=5, sticky=tk.W)

check_cross_validation = ttk.Checkbutton(advanced_frame, text="Usa Cross Validation", variable=use_cross_validation)

check_cross_validation.grid(row=0, column=1, padx=5, sticky=tk.W)

check_ensemble = ttk.Checkbutton(advanced_frame, text="Usa Ensemble", variable=use_ensemble)

check_ensemble.grid(row=1, column=0, padx=5, sticky=tk.W)

ttk.Label(advanced_frame, text="Numero di modelli nell'ensemble:").grid(row=2, column=0, padx=5, sticky=tk.W)

spinbox_num_models = ttk.Spinbox(advanced_frame, from_=1, to=10, width=5)

spinbox_num_models.grid(row=2, column=1, padx=5, sticky=tk.W)

# --- Stato e Output ---

status_bar = ttk.Label(main_frame, text="Stato: in attesa...", relief=tk.SUNKEN, anchor='w')

status_bar.grid(row=5, column=0, columnspan=2, sticky=(tk.W, tk.E))

text_output = tk.Text(main_frame, height=10, width=80)

text_output.grid(row=6, column=0, columnspan=2, padx=5, pady=5)

# --- Pulsante di Avvio ---

button_avvia = ttk.Button(main_frame, text="Avvia Previsione", command=avvia_previsione)

button_avvia.grid(row=7, column=0, columnspan=2, pady=10)

# Label ultima estrazione

ultima_estrazione_label = ttk.Label(main_frame, text=data_ultima_estrazione)

ultima_estrazione_label.grid(row=8, column=0, columnspan=2)

root.mainloop()